The concept of microservices, or microservice architecture, is a software development technique that breaks an application down into loosely coupled services. Each service is small and independent, runs in its own process, and communicates with the others through lightweight mechanisms to achieve business goals. This makes it practical to develop and deploy large, complex applications, and it helps organizations evolve and expand their technology stack. The microservice architecture pattern language is a collection of patterns for implementing this architecture. Amazon and Netflix are well-known real-world examples of companies that use microservice architecture successfully. Meanwhile, Kubernetes provides a complete solution for managing containers across multiple hosts. It offers a container-centric management environment that is particularly well suited to deploying microservices, including those packaged as Docker containers. Because Kubernetes is designed to manage container-based applications, it suits both building new microservices and migrating existing applications into containers for easier management and deployment.

In 2023, microservices are a popular approach for building and deploying large and complex applications. The approach breaks applications down into independent components that can be developed, tested, and deployed separately, which promotes agility and lets teams respond promptly to changing business requirements. Despite these benefits, managing microservices can be challenging, particularly as the number of services grows. Kubernetes provides a solution to this challenge. Continue reading to learn how to manage microservices with Kubernetes.

A container is a compact bundle that contains everything an application needs to run, such as its code, tools, libraries, and settings. Containers make applications easy to deploy and scale, and they provide a reliable, isolated environment in which applications run the same way regardless of the underlying infrastructure.

Microservices is an architectural style that has transformed software development by letting us break complex problems into smaller, more manageable pieces. An application is built from several independent services that communicate through APIs, so each service can be developed, deployed, and scaled on its own.

In practice, microservices are often deployed in containers, because containers provide the isolation and consistency that microservices need to run independently and communicate with one another. However, containers are only one way to implement microservices; we can also deploy them on virtual machines or bare-metal servers.

In summary, containers are a way to package and distribute software, whereas microservices are an architectural pattern for building software applications. If you want to use containers to deploy and manage microservices, Kubernetes is a popular choice. Let’s look at why in the next section.

Benefits of Using Kubernetes for Microservices

Kubernetes is well suited to deploying and governing microservices because it can reliably deploy and manage containerized applications at scale. Here are some benefits of using Kubernetes for microservices:

- Scalability

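Kubernetes makes it easy to scale services up or down as needed, either manually with kubectl or automatically with the Horizontal Pod Autoscaler. This removes much of the manual effort from scaling and lets you respond quickly to changing demand.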

- High availability

Kubernetes offers built-in high-availability features: it can run multiple replicas of each service and reschedule pods away from failed nodes, so services can remain available during node failures or network disruptions.

- Dynamic resource allocation

Kubernetes schedules containers onto nodes based on the resources they request and can adjust the number of running replicas as demand changes, enabling more efficient resource utilization and cost savings.

- Self-healing

Kubernetes automatically restarts containers that crash or fail their health checks and replaces pods lost when a node fails, helping maintain uptime and reliability.
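The scaling and resource-allocation benefits above are typically delivered by the HorizontalPodAutoscaler (HPA). According to the Kubernetes documentation, its core rule is desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), with no change when that ratio is within a tolerance (0.1 by default). The JavaScript sketch below reproduces only that rule plus min/max clamping; the function and parameter names are hypothetical, and it deliberately ignores stabilization windows, scaling policies, and the special handling of unready or missing pods.

```javascript
// Minimal sketch of the HorizontalPodAutoscaler's core scaling rule as
// documented by Kubernetes. Function and parameter names are hypothetical.
// Not modeled: stabilization windows, scaling policies, unready/missing pods.
const DEFAULT_TOLERANCE = 0.1; // documented default HPA tolerance

function desiredReplicas({
  current,        // replicas currently running
  currentMetric,  // observed average metric, e.g. 90 (% CPU utilization)
  targetMetric,   // target average metric, e.g. 60
  min = 1,        // like the HPA's minReplicas (defaults to 1)
  max = Infinity, // like the HPA's maxReplicas (required in a real HPA)
  tolerance = DEFAULT_TOLERANCE,
}) {
  const ratio = currentMetric / targetMetric;
  // Close enough to the target: keep the current replica count.
  const proposed =
    Math.abs(ratio - 1) <= tolerance ? current : Math.ceil(current * ratio);
  // Clamp the result to the configured bounds.
  return Math.min(max, Math.max(min, proposed));
}

console.log(desiredReplicas({ current: 3, currentMetric: 90, targetMetric: 60, max: 10 })); // prints 5
```

For example, 3 replicas averaging 90% CPU utilization against a 60% target give ceil(3 × 1.5) = 5 replicas. Because utilization is measured relative to each container's CPU request, the HPA only works for CPU if the pods declare requests.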

Before deploying your microservices, you need to set up a Kubernetes cluster. A cluster is a group of nodes: the control plane manages the cluster, and worker nodes run your containers through a container runtime. There are many ways to set up a cluster, including:

- Using a managed service such as Google Kubernetes Engine (GKE) or Amazon Elastic Kubernetes Service (EKS)

- Installing Kubernetes on your own infrastructure by creating nodes (virtual or physical machines) and joining them to the control-plane node

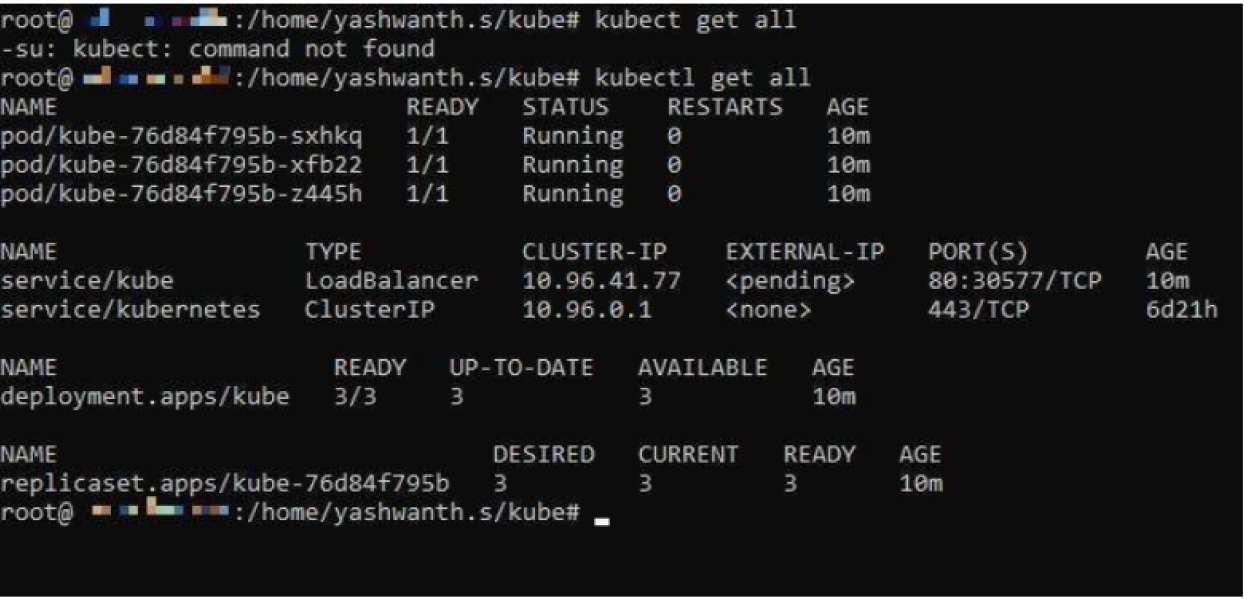

You can use the Kubernetes command-line interface (CLI), kubectl, to manage your cluster. kubectl is a powerful tool for managing Kubernetes clusters: you can use it to deploy and manage applications on the cluster, inspect its state, and debug any issues that arise.

To use kubectl, you must first install it on your computer; installation instructions are available in the official Kubernetes documentation. Once it is installed, you can run it by typing `kubectl` in your terminal. To check that the installation succeeded, run `kubectl version`. This prints the version of the kubectl client installed on your machine and, if kubectl can reach a cluster, the server version as well (as shown in the screenshot below).

Once installation is complete, there are a few basic commands you should be familiar with. To list the nodes in your current cluster along with their status, run `kubectl get nodes`. To view more detailed information about a specific node, run `kubectl describe node <node-name>`. This shows details such as the node's IP address, hostname, capacity, and conditions.

You can also use kubectl to deploy applications on your cluster. To do this, you need a configuration file (a manifest) for your application that specifies details such as the number of replicas and the container image to use for the pods. Now, let’s look at how to deploy a simple microservice application in Kubernetes.
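To use node information like this in scripts, you can ask kubectl for machine-readable output with `kubectl get nodes -o json`. The sketch below assumes that output has already been parsed into an object and extracts each node's name, its `Ready` condition, and the `InternalIP` and `Hostname` addresses that `kubectl describe node` also displays. The `summarizeNodes` helper is hypothetical; the field names follow the Kubernetes v1 Node object.

```javascript
// Hypothetical helper: summarize the JSON printed by `kubectl get nodes -o json`.
// Field names follow the Kubernetes v1 Node object.
function summarizeNodes(nodeList) {
  return (nodeList.items ?? []).map((node) => {
    const conditions = node.status?.conditions ?? [];
    const addresses = node.status?.addresses ?? [];
    // Look up an address of the given type (e.g. "InternalIP", "Hostname").
    const addressOf = (type) =>
      addresses.find((a) => a.type === type)?.address ?? null;
    const readyCondition = conditions.find((c) => c.type === "Ready");
    return {
      name: node.metadata.name,
      ready: readyCondition?.status === "True",
      internalIP: addressOf("InternalIP"),
      hostname: addressOf("Hostname"),
    };
  });
}

// Example usage against a real cluster (requires kubectl on the PATH):
//   const { execFileSync } = require("node:child_process");
//   const json = execFileSync("kubectl", ["get", "nodes", "-o", "json"], { encoding: "utf8" });
//   console.table(summarizeNodes(JSON.parse(json)));
```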

For this, you’ll need to create a Node.js project by running the following command: `npm init -y`.

To create a basic microservice, you need the Express package. Install it by running `npm install express --save` (since npm 5, `--save` is the default, so `npm install express` does the same thing).

Below is sample code for a simple microservice in Node.js.

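Create the Docker image with a command such as `docker build -t <image-name> .`, run from the project directory that contains your Dockerfile.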

Write a Kubernetes manifest file to deploy this application in Kubernetes, then apply it with `kubectl apply -f <manifest-file>`.

After that, scale the deployed service to meet your requirements with `kubectl scale deployment my-web-service --replicas=<number-of-replicas>`.
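After running `kubectl scale`, you can wait for the new replicas with `kubectl rollout status deployment/my-web-service`. The sketch below wraps the scale command in Node.js and approximates the checks `kubectl rollout status` performs on the Deployment's status fields (as printed by `kubectl get deployment my-web-service -o json`). The helper names `scaleArgs`, `scale`, and `rolloutComplete` are hypothetical, and the sketch skips the progress-deadline check that kubectl also applies.

```javascript
// Hypothetical helpers around `kubectl scale` and Deployment status.
const { execFileSync } = require("node:child_process");

// Argument list for `kubectl scale deployment <name> --replicas=<n>`.
function scaleArgs(deployment, replicas) {
  if (!Number.isInteger(replicas) || replicas < 0) {
    throw new RangeError(`replicas must be a non-negative integer, got ${replicas}`);
  }
  return ["scale", "deployment", deployment, `--replicas=${replicas}`];
}

// Runs kubectl (must be installed and pointed at your cluster).
function scale(deployment, replicas) {
  execFileSync("kubectl", scaleArgs(deployment, replicas), { stdio: "inherit" });
}

// Approximates the checks `kubectl rollout status` makes, given the object
// printed by `kubectl get deployment <name> -o json`.
function rolloutComplete(dep) {
  const desired = dep.spec?.replicas ?? 1; // spec.replicas defaults to 1
  const s = dep.status ?? {};
  // The controller must have seen the latest spec (e.g. the new replica count).
  if ((s.observedGeneration ?? 0) < (dep.metadata?.generation ?? 0)) return false;
  // Zero-valued status counters are omitted from the JSON, so default to 0.
  const updated = s.updatedReplicas ?? 0;
  return (
    updated >= desired && // all desired replicas run the current spec
    (s.replicas ?? 0) <= updated && // no old replicas still pending termination
    (s.availableReplicas ?? 0) >= updated // every updated replica is available
  );
}

// Example: scale("my-web-service", 3), then poll rolloutComplete(...) with the
// parsed output of `kubectl get deployment my-web-service -o json`.
```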

Here is an image of successfully deploying and scaling the application using Kubernetes!

Kubernetes has become the go-to solution for managing microservices due to its flexibility, scalability, and reliability. With powerful features and tools like the Kubernetes CLI (kubectl), managing microservices becomes far less challenging. By setting up a Kubernetes cluster, you can keep your microservices running smoothly and efficiently while being able to monitor, troubleshoot, and scale your applications as needed. Whether you are a developer or an operations specialist, Kubernetes provides a platform that helps you manage your microservices effectively and simplify your workflow. If you are considering Kubernetes for managing your microservices, talk to our experts to find out whether it is worth exploring for you.
